
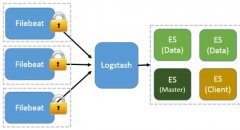

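The requirement: at low log volume, use Filebeat to collect several sets of logs and ship them to Logstash on port 5044. Logstash separates and cleans the events, then writes each log type to its own index. The configuration files follow.

The log formats are as follows.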

Log 1:

    2018-06-14 03:03:04|tj1-sre-test-glx.kscn --> c3-im-ims31.bj|0|5
    2018-06-14 03:03:04|tj1-sre-test-glx.kscn --> c4-im-ims01.bj|0|6
    2018-06-14 03:03:19|tj1-sre-test-glx.kscn --> lg-im-ims01.bj|0|5

Log 2:

    account|2018-06-14 17:45:12|job.passport-support_service.passport-support_cluster.aws-sgp|3f617c5f|leijinyan
    feed|2018-06-14 18:18:21|job.news-recommend-service_service.news-recommend-service_cluster.c4|224e27f5|zhangxinxing
    im|2018-06-14 18:22:06|job.pms_service.pms_servicegroup.mipush_cluster.c4|c05a03b1278a99a2c2ea81ab6ef05a510079e752|yuyuanhe
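Before wiring the patterns into Logstash, it can help to check the field layout of both formats offline. The following is a minimal Python sketch, not part of the original setup: the regular expressions are rough equivalents of the grok patterns used in the Logstash configuration later in this post (`DATA` approximated as a lazy `.*?`, `NUMBER` as a simplified numeric pattern), and the function names `parse_pping` and `parse_deploy` are hypothetical.

```python
import re

# Rough regex stand-ins for the grok patterns (assumption: DATA ~ ".*?",
# NUMBER ~ an optionally signed integer or decimal).
NUMBER = r"-?\d+(?:\.\d+)?"
PPING = re.compile(
    rf"(?P<logtime>.*?)\|(?P<std>.*?)\|(?P<loss>{NUMBER})\|(?P<delay>{NUMBER})$"
)
DEPLOY = re.compile(
    r"(?P<service>.*?)\|(?P<logtime>.*?)\|(?P<job>.*?)\|(?P<key>.*?)\|(?P<operator>.*?)$"
)


def parse_pping(line):
    """Split a 'log 1' line into fields; return None if it does not match."""
    m = PPING.search(line)
    if m is None:
        return None
    fields = m.groupdict()
    # Mirror the mutate/convert step: loss as float, delay as integer.
    fields["loss"] = float(fields["loss"])
    fields["delay"] = int(float(fields["delay"]))
    return fields


def parse_deploy(line):
    """Split a 'log 2' line into fields; return None if it does not match."""
    m = DEPLOY.search(line)
    return m.groupdict() if m else None


if __name__ == "__main__":
    print(parse_pping("2018-06-14 03:03:04|tj1-sre-test-glx.kscn --> c3-im-ims31.bj|0|5"))
    print(parse_deploy("feed|2018-06-14 18:18:21|job.news-recommend-service_service.news-recommend-service_cluster.c4|224e27f5|zhangxinxing"))
```

A line that returns `None` here corresponds roughly to an event that Logstash would tag with `_grokparsefailure`.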

The Filebeat configuration file:

    filebeat.prospectors:
    - type: log
      enabled: true
      paths:
        - /home/work/log/pping.log
      fields:
        service: pping_log
      tail_files: true
    - type: log
      enabled: true
      paths:
        - /home/work/log/search_deploy_stastic_data
      fields:
        service: search_deploy_stastic_data
      tail_files: true
    ..............
    output.logstash:
      hosts: ["1.1.1.1:5044"]
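Each prospector tags its events with `fields.service`, and the Logstash configuration later in this post keys on that value to choose both the filter branch and the target index. The routing can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names are hypothetical, and the UTC+8 offset is an assumed host timezone, not something the original states. The point it shows is that the `date` filter reads `logtime` in the Logstash host's timezone when none is configured, while the `%{+YYYY.MM.dd}` part of the index name is rendered from `@timestamp` in UTC, so an early-morning local event can land in the previous day's index.

```python
from datetime import datetime, timedelta, timezone

# Assumption for illustration only: the Logstash host runs in UTC+8.
ASSUMED_LOCAL_TZ = timezone(timedelta(hours=8))

# Index prefixes taken from the output section of the Logstash config.
INDEX_PREFIX = {
    "pping_log": "logstash-ppinglog-",
    "search_deploy_stastic_data": "logstash-search_deploy_stastic_data-",
}


def to_utc(logtime):
    """Interpret 'yyyy-MM-dd HH:mm:ss' as local time and convert it to UTC."""
    local = datetime.strptime(logtime, "%Y-%m-%d %H:%M:%S")
    return local.replace(tzinfo=ASSUMED_LOCAL_TZ).astimezone(timezone.utc)


def index_for(service, logtime, tags=()):
    """Return the daily index name, or None if the event would not be indexed."""
    if "_grokparsefailure" in tags or service not in INDEX_PREFIX:
        return None
    return INDEX_PREFIX[service] + to_utc(logtime).strftime("%Y.%m.%d")


if __name__ == "__main__":
    print(index_for("pping_log", "2018-06-14 03:03:04"))
    print(index_for("search_deploy_stastic_data", "2018-06-14 17:45:12"))
```

If the day boundary matters for queries, one option is to set the `timezone` option of the `date` filter explicitly rather than relying on the host default.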

The Logstash cleaning configuration:

    input {
      beats {
        port => 5044
      }
    }

    filter {
      if [fields][service] == 'pping_log' {
        grok {
          match => ["message","%{DATA:logtime}\|%{DATA:std}\|%{NUMBER:loss}\|%{NUMBER:delay}$"]
        }
        date {
          match => ["logtime", "yyyy-MM-dd HH:mm:ss"]
          target => "@timestamp"
          remove_field => ["logtime","message","[beat][version]","[beat][name]","offset","prospector","tags","source"]
        }
        mutate {
          convert => [
            "loss" , "float",
            "delay" , "integer" ]
        }
      }
      if [fields][service] == 'search_deploy_stastic_data' {
        grok {
          match => ["message","%{DATA:service}\|%{DATA:logtime}\|%{DATA:job}\|%{DATA:key}\|%{DATA:operator}$"]
        }
        date {
          match => ["logtime", "yyyy-MM-dd HH:mm:ss"]
          target => "@timestamp"
          remove_field => ["logtime","message","[beat][version]","[beat][name]","offset","prospector","tags","source"]
        }
      }
    }

    output {
      if [fields][service] == "pping_log" {
        if "_grokparsefailure" not in [tags] {
          elasticsearch {
            hosts => ["1.1.1.1:9200"]
            index => "logstash-ppinglog-%{+YYYY.MM.dd}"
          }
        }
      }
      if [fields][service] == "search_deploy_stastic_data" {
        if "_grokparsefailure" not in [tags] {
          elasticsearch {
            hosts => ["1.1.1.1:9200"]
            index => "logstash-search_deploy_stastic_data-%{+YYYY.MM.dd}"
          }
        }
      }
    }

Original work of "运维网咖社". Reposting is permitted, provided the repost links to the original source and includes the author information and this notice; otherwise legal action will be pursued. http://www.net-add.com


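About the author: "矢量比特", head of 运维网咖社, previously worked at ChinaSoft and Sina and now works at Xiaomi, focusing on exploring DevOps operations systems and on the research and practice of operations technology.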